my friends!

I have done it at last

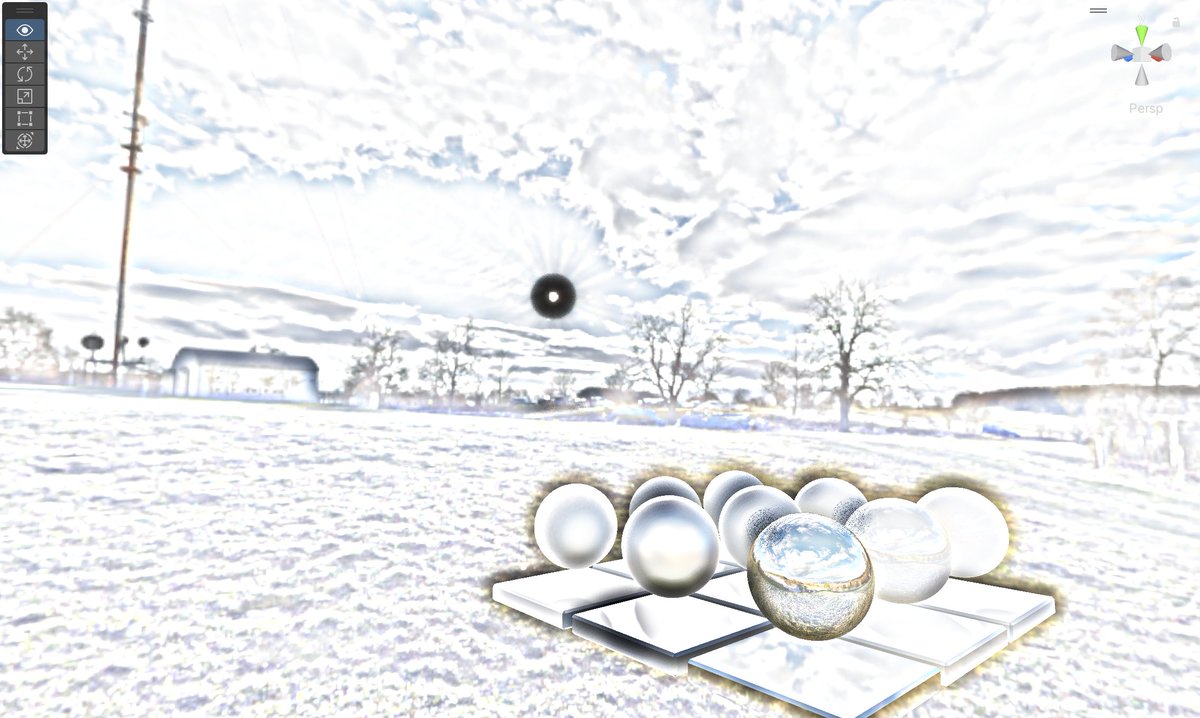

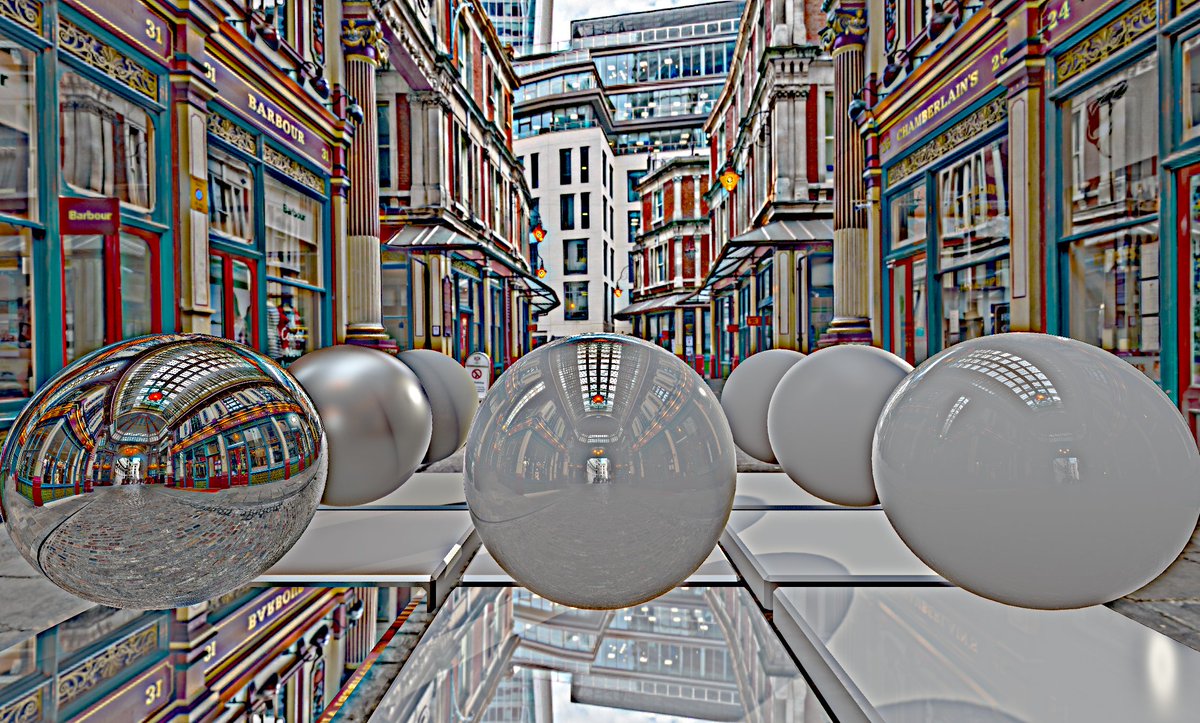

BEHOLD:

REAL TIME SHITTY HDR

so if you, like me, have always been kinda fascinated at a technical level by Shitty HDR, wondering what sort of math could ruin a photograph in such an upsetting way, and what produces all those bizarre halo artifacts:

I have now learned it so you don't have to

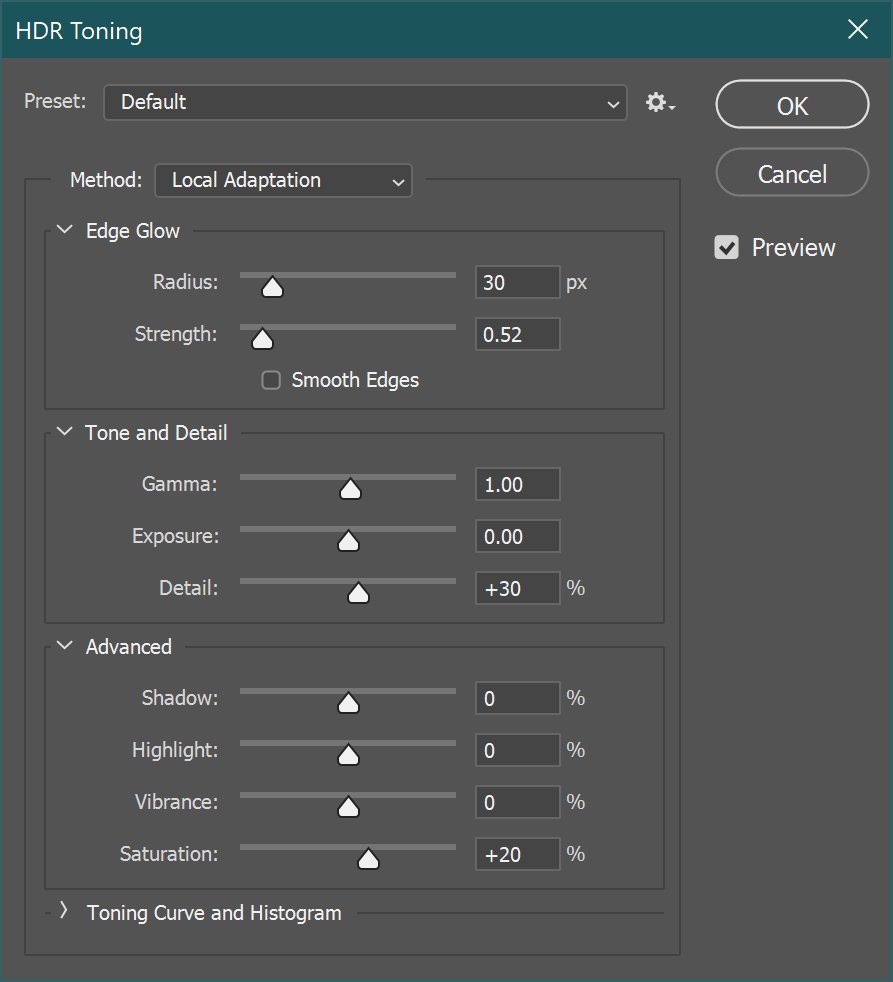

If you want to skip ahead, I'm pretty sure this is the actual approach that Photoshop uses, although the bilateral filter might be written differently:

Fast Bilateral Filtering for the Display of High-Dynamic-Range Images
https://people.csail.mit.edu/fredo/PUBLI/Siggraph2002/

Adobe added "Local Adaptation" in 2010, which is why Shitty HDR was such a phenomenon circa 2011-13ish

Interestingly, it turns out there's not really one specific algorithm for Shitty HDR, which made researching it a pain. It's more like SSAO or TAA, where the basic *approach* is established in a couple papers, but the implementation details vary a ton, and advance constantly

The core idea is just: frequency separation. A paper in 1993 noted that the eye can see detail in both bright and dark regions, and so an eye-like tonemapping operator should preserve high-frequency detail while lowering the impact of large, low-frequency brightness variation

You can also choose to boost the intensity of the high freq component before combining, which can bring out additional detail

if you're familiar with image processing, what I have just described is LITERALLY a sharpening filter, so that's why Shitty HDR looks badly sharpened lol
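
Here's a rough sketch of that frequency separation on a single 1D scanline of luminance values, in plain Python. The function names, the choice to work in log2, and the `sigma` / `base_scale` / `detail_boost` parameters are all assumptions for illustration, not something lifted from a specific paper or from Photoshop:

```python
import math

def gaussian_blur(signal, sigma):
    """Gaussian blur of a 1D list, with edge-clamped borders."""
    radius = max(1, int(3 * sigma))
    offsets = range(-radius, radius + 1)
    weights = [math.exp(-(k * k) / (2 * sigma * sigma)) for k in offsets]
    total = sum(weights)
    last = len(signal) - 1
    blurred = []
    for i in range(len(signal)):
        acc = 0.0
        for k, w in zip(offsets, weights):
            acc += w * signal[min(max(i + k, 0), last)]
        blurred.append(acc / total)
    return blurred

def separate_and_recombine(luminance, sigma=8.0, base_scale=0.4, detail_boost=1.5):
    # Work in log2 (stops) so scaling the base compresses contrast ratios.
    log_lum = [math.log2(max(v, 1e-6)) for v in luminance]
    base = gaussian_blur(log_lum, sigma)               # low frequencies ("lighting")
    detail = [l - b for l, b in zip(log_lum, base)]    # high frequencies ("texture")
    out = [base_scale * b + detail_boost * d for b, d in zip(base, detail)]
    return [2.0 ** v for v in out]
```

With `base_scale=1.0` the recombined value is `log_lum + (detail_boost - 1) * (log_lum - blur)`, which is the usual unsharp mask formula, hence the badly sharpened look.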

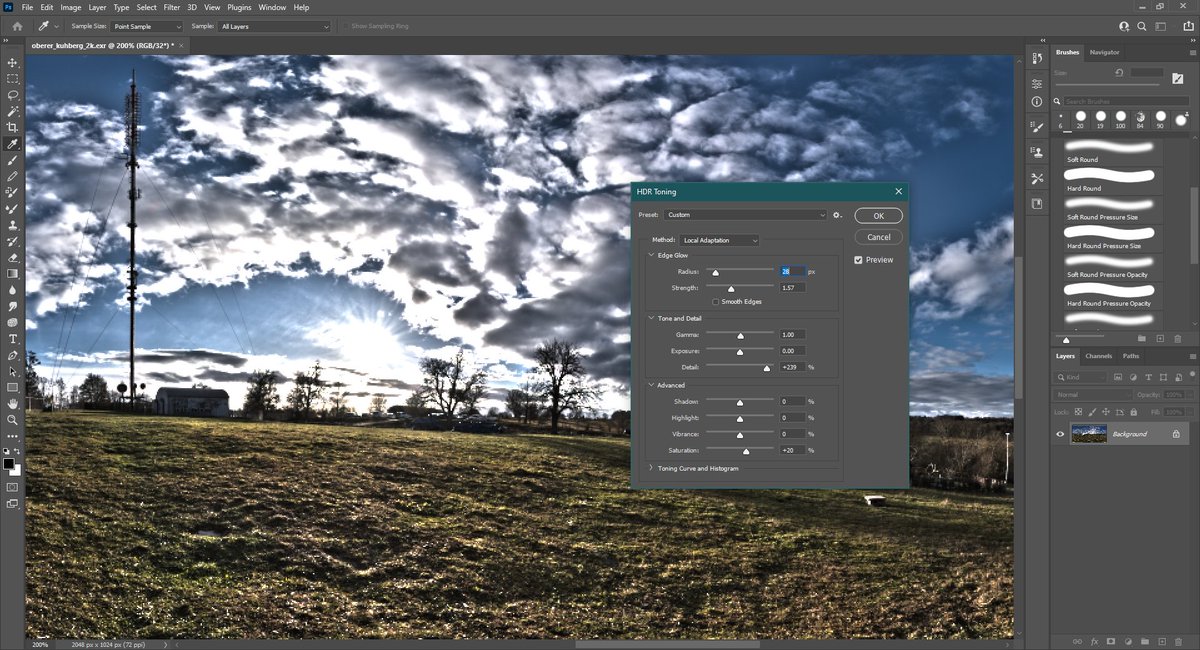

This approach has a problem with severe ringing artifacts. Changes in intensity are surrounded by halos, and the halo's strength is related to the intensity delta

this becomes EXTREMELY severe around large changes, like the edge of the sun's disc, even worse if we boost detail
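
To put a number on the halos, this sketch runs the blur-based separation over a 1D step edge and measures how far the bright side overshoots. Everything here (`halo_overshoot_stops`, the log2 working space, the default parameters) is a made-up test harness, not a standard metric:

```python
import math

def blur(signal, sigma):
    """Edge-clamped 1D Gaussian blur."""
    radius = int(3 * sigma)
    offsets = range(-radius, radius + 1)
    weights = [math.exp(-(k * k) / (2 * sigma * sigma)) for k in offsets]
    total = sum(weights)
    last = len(signal) - 1
    return [
        sum(w * signal[min(max(i + k, 0), last)] for k, w in zip(offsets, weights)) / total
        for i in range(len(signal))
    ]

def halo_overshoot_stops(step_ratio, sigma=4.0, base_scale=0.4, detail_boost=1.0, n=64):
    """How far (in stops) the bright side of a step edge overshoots its settled value."""
    log_lum = [0.0] * (n // 2) + [math.log2(step_ratio)] * (n // 2)
    base = blur(log_lum, sigma)
    out = [base_scale * b + detail_boost * (l - b) for l, b in zip(log_lum, base)]
    settled = out[-1]   # far from the edge, where the detail layer is ~0
    return max(out) - settled

cloud_edge = halo_overshoot_stops(2.0)                       # 1 stop step
sun_edge = halo_overshoot_stops(1000.0)                      # ~10 stop step
boosted = halo_overshoot_stops(1000.0, detail_boost=2.0)     # same edge, boosted detail
```

In this setup the overshoot scales linearly with the size of the step in stops, and boosting the detail layer makes it bigger again, which matches the sun disc being the worst case.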

Replacing the plain blur with something smarter has been an ongoing research thing since the 90s. The core idea of separating surface detail/texture from lighting, then tonemapping only the lighting, is very powerful. There are LOTS of papers proposing different ways of improving this separation

After realizing there are tons of papers on this subject and Shitty HDR isn't just, like, one random filter like Render Clouds or whatever, I was like "Wait, why do all of this? Why's there so much research here when you could just use a tonemap curve like Reinhard or ACES?"

I'm pretty sure the answer is "phones"

Games and film use tonemapping functions bc we want a "filmic" response curve. Film has a high dynamic range but rolls off highlights in an aesthetically pleasant way
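
For contrast with the local approach, here's roughly what a global curve looks like, using the Reinhard operator as the example. The function names and the `white_point` parameter name are just picked for this sketch:

```python
def reinhard(luminance):
    """Basic Reinhard curve: maps [0, inf) into [0, 1)."""
    return luminance / (1.0 + luminance)

def reinhard_extended(luminance, white_point):
    """Extended Reinhard: luminance == white_point maps to exactly 1.0."""
    return luminance * (1.0 + luminance / (white_point * white_point)) / (1.0 + luminance)
```

The output depends only on the pixel's own value, never on its neighbors, so highlights roll off smoothly but the curve can't treat a cloud next to the sun any differently from a cloud anywhere else.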

This is very much NOT the state of the art for computational photography

Phones can shoot massive dynamic range now, and nobody working on them is interested in emulating film. If you point an iPhone at a sunset, or a skyscraper at night, it does everything possible to emulate EYESIGHT

they want the image on the screen to look like what your eyes see

This was also the motivation stated in the papers I read. The whole Shitty HDR approach of splitting lighting apart from texture was explicitly motivated by how eyes work, and how artists can paint intensely realistic images that look nothing like film

this is, unexpectedly, really fascinating?

thinking about ways to approach tonemapping from this direction in a game engine feels like it could produce some really neat stuff

but, back on subject

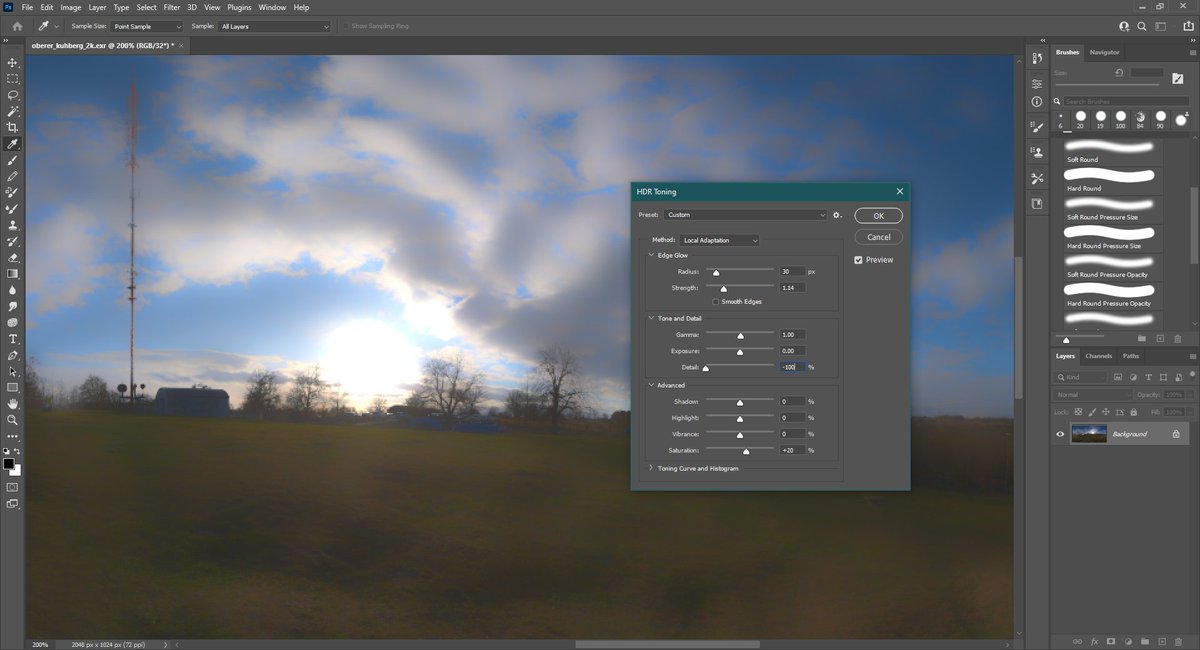

the Durand/Dorsey paper I linked above suggests a way to mitigate the ringing artifacts: Use a bilateral filter for the low frequencies, instead of a blur

As near as I can tell this is what Photoshop does
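
A minimal brute-force bilateral filter on a 1D scanline looks something like this. It's a sketch of the general technique, not Photoshop's code and not the accelerated version from the paper; `sigma_space` and `sigma_range` are the usual names for the two falloffs, and the default values are arbitrary:

```python
import math

def bilateral_filter(signal, sigma_space=4.0, sigma_range=0.5):
    """Brute-force 1D bilateral filter.

    Each neighbor is weighted by how close it is in position (like a Gaussian
    blur) AND by how close it is in value, so samples across a strong edge
    contribute almost nothing.
    """
    radius = int(3 * sigma_space)
    last = len(signal) - 1
    out = []
    for i, center in enumerate(signal):
        acc = 0.0
        weight_sum = 0.0
        for k in range(-radius, radius + 1):
            value = signal[min(max(i + k, 0), last)]
            w_space = math.exp(-(k * k) / (2 * sigma_space ** 2))
            w_range = math.exp(-((value - center) ** 2) / (2 * sigma_range ** 2))
            acc += w_space * w_range * value
            weight_sum += w_space * w_range
        out.append(acc / weight_sum)   # weight_sum >= 1 because the center tap has weight 1
    return out
```

Swapping this in for the blur when computing the base layer is the whole change; the detail layer is still just the input minus the base.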

Also: big thanks to @Atrix256 for explaining how to accelerate the bilateral step

a correct bilateral is super expensive for wide radii, but it turns out if you use a good enough stochastic sampling pattern you can get very nice results while heavily undersampling
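
The undersampling idea can be sketched like this: instead of visiting every tap inside the radius, take a handful of random ones per pixel. Big caveat: this uses plain white-noise offsets from Python's `random` module as a stand-in, which is almost certainly worse than the sampling pattern actually used here, and the parameter names and defaults are assumptions:

```python
import math
import random

def stochastic_bilateral(signal, radius=32, samples=8, sigma_range=0.5, seed=0):
    """Undersampled 1D bilateral: a few random taps per pixel instead of all 2*radius+1."""
    rng = random.Random(seed)
    sigma_space = radius / 3.0
    last = len(signal) - 1
    out = []
    for i, center in enumerate(signal):
        # Always include the center tap so the weight sum can never be zero.
        acc, weight_sum = center, 1.0
        for _ in range(samples):
            k = rng.randint(-radius, radius)   # white-noise offset (stand-in pattern)
            value = signal[min(max(i + k, 0), last)]
            w = (math.exp(-(k * k) / (2 * sigma_space ** 2))
                 * math.exp(-((value - center) ** 2) / (2 * sigma_range ** 2)))
            acc += w * value
            weight_sum += w
        out.append(acc / weight_sum)
    return out
```

With these defaults that's 8 taps per pixel instead of 65, at the cost of noise in the base layer.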

so it turns out the bilateral filter is really the key component of Shitty HDR

high-intensity boundaries like the sun disc don't get blurred, so they don't form halos. Low-intensity boundaries like the edges of the clouds WILL still blur though, and thus get boosted/sharpened
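
The difference comes straight out of the bilateral filter's range term. A quick sketch, assuming brightness differences measured in stops and an arbitrary `sigma_range` of 0.5 (both are illustrative choices, and the two example deltas are invented):

```python
import math

def range_weight(delta_stops, sigma_range=0.5):
    """Bilateral range term: how much a neighbor counts, given its brightness difference."""
    return math.exp(-(delta_stops ** 2) / (2 * sigma_range ** 2))

cloud_edge = range_weight(0.3)   # subtle boundary: neighbor still counts a lot
sun_edge = range_weight(10.0)    # huge boundary: neighbor is effectively ignored
```

Neighbors across a subtle edge keep most of their weight, so the edge blurs into the base layer and ends up in the boosted detail layer. Neighbors across a huge edge get a weight that is effectively zero, so the base layer keeps the edge and there's nothing left over to turn into a halo.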

(ignore the black pixels around the sun; that's due to my filter being undersampled to keep the framerate manageable)

also Photoshop's Smooth Edges checkbox appears to switch to a different filtering algorithm entirely, changing the function of the Strength slider and producing dramatically different results lol

No idea what's going on there tbh lol

This all reveals why there's so much variation in the Shitty HDR aesthetic. The implementation details matter A LOT, even when the approach is the same. Every program that implements Shitty HDR behaves slightly differently

Deciding which steps should use RGB and which should use luminance, which parts should use linear vs log values, etc. is mostly up to preference (I'm using RGB bc it looks Shittier)
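
To make the RGB vs luminance choice concrete, here's a sketch of both options for a single linear RGB pixel. The helper names are made up, `curve` is a placeholder for whatever brightness remapping you actually run, and the Rec. 709 luminance coefficients are one common choice rather than anything this thread specifies:

```python
def luminance(rgb):
    """Luminance of a linear RGB pixel using Rec. 709 coefficients."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b

def tonemap_per_channel(rgb, curve):
    """Run the curve on R, G, and B independently (changes the channel ratios)."""
    return tuple(curve(c) for c in rgb)

def tonemap_luminance(rgb, curve):
    """Run the curve on luminance only, then rescale RGB so channel ratios survive."""
    lum = luminance(rgb)
    if lum <= 0.0:
        return (0.0, 0.0, 0.0)
    scale = curve(lum) / lum
    return tuple(c * scale for c in rgb)

def curve(x):
    # Placeholder remapping; stands in for the real brightness change.
    return x / (1.0 + x)
```

The luminance version keeps hue and saturation intact, while the per-channel version squashes the ratios between channels, so the two look noticeably different even with everything else held equal.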

the tonemapping implementation is whatever you want, and it has a HUGE effect on the final image